Automated Creation of Digital Cousins for Robust Policy Learning

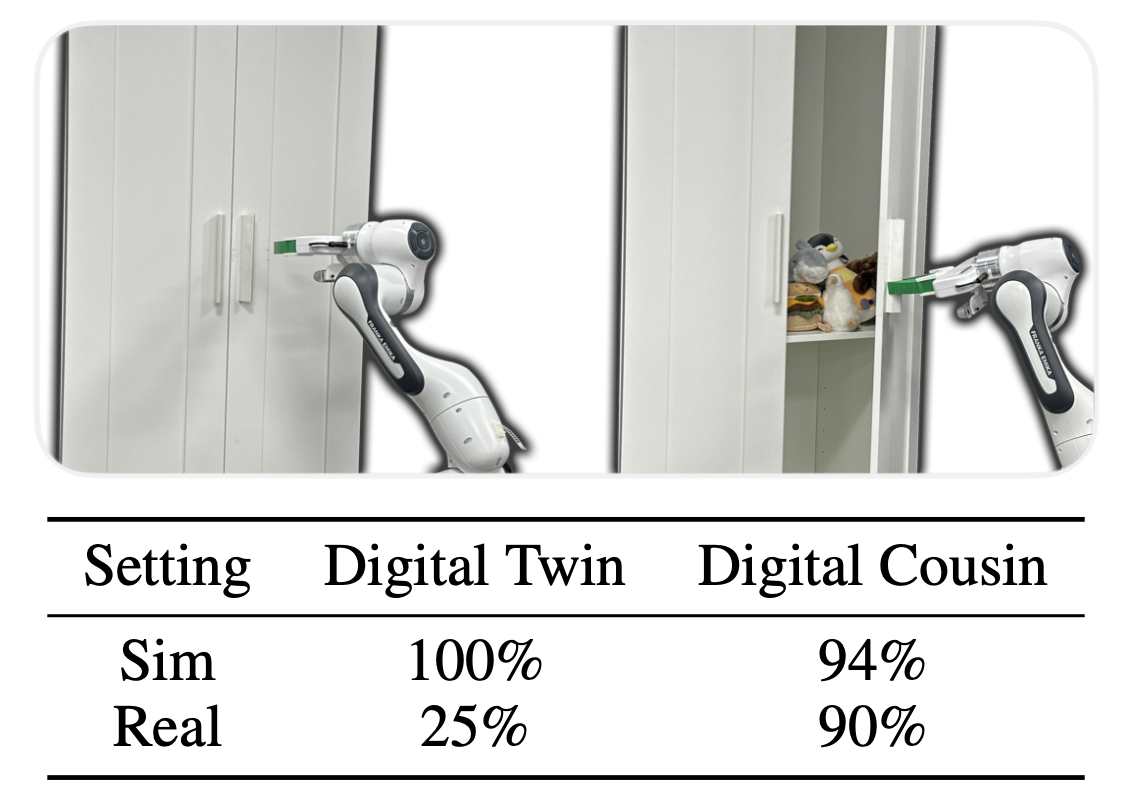

A policy trained in simulation with digital cousins can successfully transfer to the real world and open a cabinet.

Training robot policies in the real world can be unsafe, costly, and difficult to scale. Simulation serves as an inexpensive and potentially limitless source of training data, but suffers from the semantics and physics disparity between simulated and real-world environments. These discrepancies can be minimized by training in digital twins, which serve as virtual replicas of a real scene but are expensive to generate and cannot produce cross-domain generalization. To address these limitations, we propose the concept of digital cousins, a virtual asset or scene that, unlike a digital twin, does not explicitly model a real-world counterpart but still exhibits similar geometric and semantic affordances. As a result, digital cousins simultaneously reduce the cost of generating an analogous virtual environment while also facilitating better generalization across domains by providing a distribution of similar training scenes. Leveraging digital cousins, we introduce a novel method for the Automatic Creation of Digital Cousins (ACDC), and propose a fully automated real-to-sim-to-real pipeline for generating fully interactive scenes and training robot policies that can be deployed zero-shot in the original scene. We find that ACDC can produce digital cousin scenes that preserve geometric and semantic affordances, and can be used to train policies that outperform policies trained on digital twins, achieving 90% vs. 25% under zero-shot sim-to-real transfer.

ACDC is composed of three sequential steps. (1) First, relevant per-object information is extracted from the input RGB image. (2) Next, we use this information with an asset dataset to match digital cousins to each detected input object. (3) Finally, we post-process the chosen digital cousins and generate a fully interactive simulated scene.
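
The matching in step (2) can be pictured as a nearest-neighbor search over asset features. The following is a minimal sketch under stated assumptions, not the ACDC implementation: it assumes each asset and each detected object already has a precomputed embedding vector (for example from a visual encoder such as DINOv2), and the names `cosine_distance`, `match_cousins`, and the toy asset dictionary are hypothetical.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def match_cousins(query_embedding, asset_embeddings, k=3):
    """Return the names of the k assets closest to the query embedding.

    asset_embeddings maps a (hypothetical) asset name to its embedding.
    """
    ranked = sorted(
        asset_embeddings,
        key=lambda name: cosine_distance(query_embedding, asset_embeddings[name]),
    )
    return ranked[:k]


# Toy 3-dimensional embeddings, invented purely for illustration.
assets = {
    "cabinet_a": [0.9, 0.1, 0.0],
    "cabinet_b": [0.8, 0.3, 0.1],
    "fridge_a": [0.1, 0.9, 0.2],
    "chair_a": [0.0, 0.2, 0.9],
}
query = [1.0, 0.1, 0.0]  # embedding of one detected input object
cousins = match_cousins(query, assets, k=2)
print(cousins)
```

Returning several nearest assets rather than the single best match is what yields a distribution of similar training scenes instead of one replica.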

We answer the following research questions through experiments:

Q1: Can ACDC produce high-quality digital cousin scenes? Given a single RGB image, can ACDC capture the high-level semantic and spatial details inherent in the original scene?

Q2: Can policies trained on digital cousins match the performance of policies trained on a digital twin when evaluated on the original setup?

Q3: Do policies trained on digital cousins exhibit better robustness compared to policies trained on a digital twin when evaluated on the out-of-distribution setups?

Q4: Do policies trained on digital cousins enable zero-shot sim-to-real policy transfer?

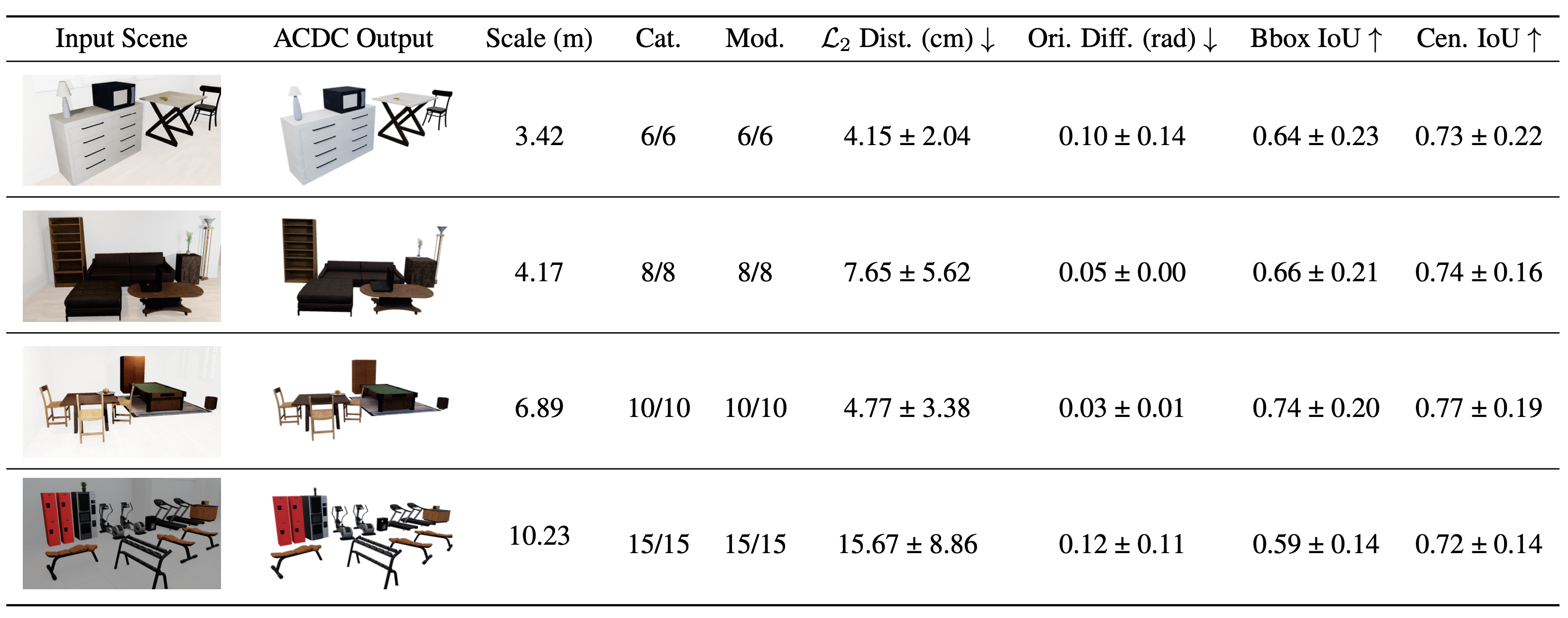

Quantitative and qualitative evaluation of scene reconstruction by ACDC in a sim-to-sim scenario. "Scale" is the largest distance between two objects' bounding boxes in the input scene. "Cat." indicates the ratio of correctly categorized objects to the total number of objects in the scene. "Mod." shows the ratio of correctly modeled objects to the total number of objects in the scene. "L2 Dist." provides the mean and standard deviation of the Euclidean distance between the centers of the bounding boxes in the input and reconstructed scenes. "Ori. Diff." represents the mean and standard deviation of the orientation magnitude difference of each centrosymmetric object. "Bbox IoU" presents the Intersection over Union (IoU) for assets' 3D bounding boxes.
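
Two of the metrics in this caption, "L2 Dist." and "Bbox IoU", can be illustrated with a short sketch. It assumes axis-aligned 3D boxes given as `(min_corner, max_corner)` tuples; the evaluation behind the figure may use a different box parameterization, and the function names and example boxes are hypothetical.

```python
import math


def bbox_center(box):
    """Center of an axis-aligned box given as (min_corner, max_corner)."""
    lo, hi = box
    return tuple((l + h) / 2.0 for l, h in zip(lo, hi))


def center_l2_distance(box_a, box_b):
    """Euclidean distance between the centers of two boxes."""
    return math.dist(bbox_center(box_a), bbox_center(box_b))


def bbox_volume(box):
    """Volume of a box; zero if any side has non-positive length."""
    lo, hi = box
    volume = 1.0
    for l, h in zip(lo, hi):
        volume *= max(0.0, h - l)
    return volume


def bbox_iou(box_a, box_b):
    """Intersection over Union of two axis-aligned 3D boxes."""
    (lo_a, hi_a), (lo_b, hi_b) = box_a, box_b
    inter_lo = tuple(max(a, b) for a, b in zip(lo_a, lo_b))
    inter_hi = tuple(min(a, b) for a, b in zip(hi_a, hi_b))
    inter = bbox_volume((inter_lo, inter_hi))
    union = bbox_volume(box_a) + bbox_volume(box_b) - inter
    return inter / union if union > 0 else 0.0


# Illustrative unit cubes: the reconstruction is shifted by 0.5 along x.
input_box = ((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
recon_box = ((0.5, 0.0, 0.0), (1.5, 1.0, 1.0))
print(center_l2_distance(input_box, recon_box))  # 0.5
print(bbox_iou(input_box, recon_box))  # intersection 0.5 over union 1.5
```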

Qualitative ACDC real-to-sim scene reconstruction results. Multiple cousins are shown for a given scene.

Based on these results, we can safely answer Q1: ACDC can indeed preserve semantic and spatial details of input scenes, generating cousins of real-world objects from a single RGB image that can be accurately positioned and scaled to match the original scene.

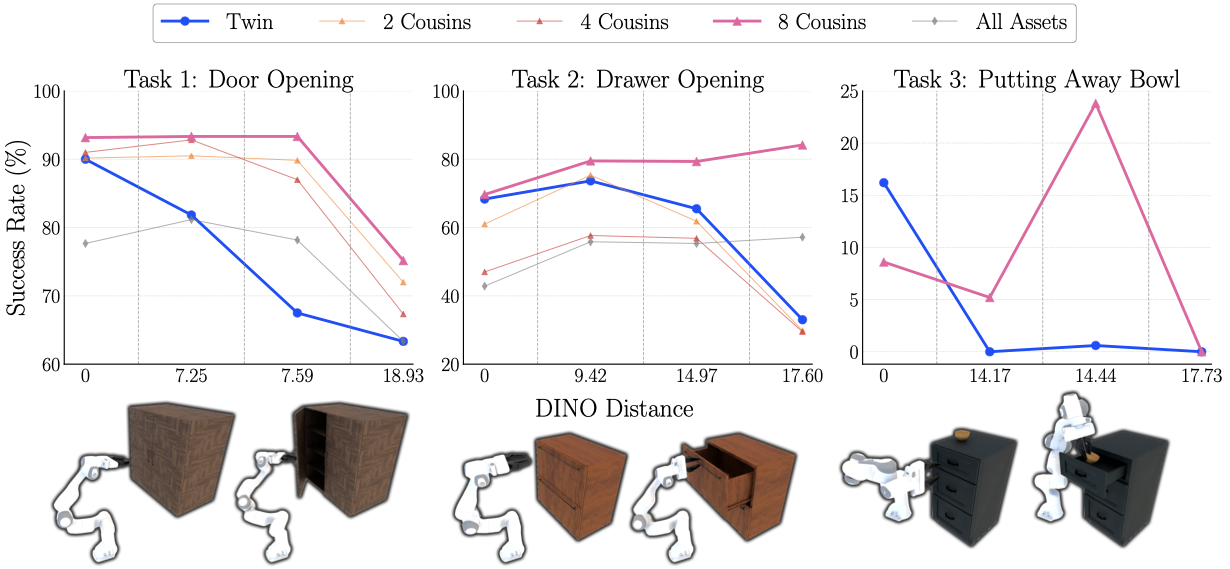

Sim-to-sim policy results. Aggregated success rates of policies trained on the exact twin, different numbers of cousins, and all assets in the three nearest categories. Policies are tested on four setups: the exact digital twin, and three increasingly dissimilar setups, as measured by DINOv2 embedding distance, to probe zero-shot generalization. Note that for Task 3, far fewer cabinet models make the task feasible, so we only compare the digital-twin and 8-cousin policies.

Zero-shot real-world evaluation of digital twin vs. digital cousin policies. Task is Door Opening on an IKEA cabinet. Metric is success rate: sim/real results averaged over 50/20 trials.

Fully automated digital cousin generation. Uncut videos of ACDC running fully automatically and generating multiple digital cousins for real kitchen scenes. The axis-aligned bounding boxes at the end of ACDC Step 1 are visualized without speed-up.

Zero-shot sim-to-real policy transfer. A sim policy trained exclusively on the four digital cousins generated above can transfer zero-shot to the corresponding real kitchen scene.

Based on these results, we can safely answer Q2, Q3, and Q4: Policies trained using digital cousins exhibit comparable in-distribution and more robust out-of-distribution performance compared to policies trained on digital twins, and can enable zero-shot sim-to-real policy transfer.

Several failure cases, e.g., failing to move towards the handle, missing the handle, and handle slippage.

ACDC is a fully automated pipeline used to quickly generate fully interactive digital cousin scenes corresponding to a single real-world RGB image. We find that policies trained on these digital cousin setups are more robust than those trained on digital twins, with comparable in-domain performance and superior out-of-domain generalization, and enable zero-shot sim-to-real policy transfer.